From Tracking to Winning

“We’ve been tracking that opportunity for a year.”

That’s one of the most common phrases we hear in the federal market. But when we dig deeper, “tracking” usually means:

· The company is aware of the opportunity

· It’s in their pipeline

· They’re following public updates but haven’t done the work to position themselves to win

They’re present, but not proactive.

This gap is rarely about intent—it’s about capacity, capability, and consistency.

Why Companies Get Stuck

Small and mid-tier contractors face several growth hurdles:

· Immature or nonexistent business development (BD) processes

· Inexperienced or overextended leadership

· Lack of bandwidth to pursue top priorities effectively

· Difficulty qualifying and prioritizing the best-fit targets

Sometimes it starts at the top—with a founder who’s technically brilliant but hasn’t built or scaled a growth engine. Other times, even seasoned mid-tier companies get pulled in too many directions, chasing too many opportunities with too few qualified resources.

Hiring Isn’t Always the Right Answer

Hiring full-time BD or capture talent can seem like the next step—but it’s risky.

Finding the right person takes time. Making the wrong hire is costly—not just in salary and onboarding, but in missed revenue, reduced morale, and delayed action. A 12–24 month misstep can mean millions in lost opportunity.

That’s why more companies are turning to fractional growth support.

What Is Fractional Growth Support?

Fractional support means experienced growth practitioners embedded with your team on a part-time, right-sized basis. These practitioners provide both strategic direction and hands-on execution, accelerating your growth without the overhead of a full-time hire.

At DWPA, we deliver fractional leadership across every phase of federal business development:

🔹 Fractional Chief Growth Officer (CGO)

· Define and operationalize your growth strategy

· Guide your most strategic pursuits

· Improve solution differentiation and win strategy

· Set and track key success metrics

· Partner with your CEO to recommend course corrections

· Connect you with DWPA’s deep SME bench to sharpen pursuit strategies

🔹 Fractional Capture Manager

· Lead or support targeted pursuits

· Mentor your internal capture and proposal team

· Strengthen customer messaging and competitive positioning

· Improve win readiness at every stage

🔹 Fractional Business Development Operations Lead

· Manage and mature your pipeline

· Run pipeline meetings and opportunity reviews

· Drive early-stage qualification and prioritization

· Coordinate across internal and external BD resources

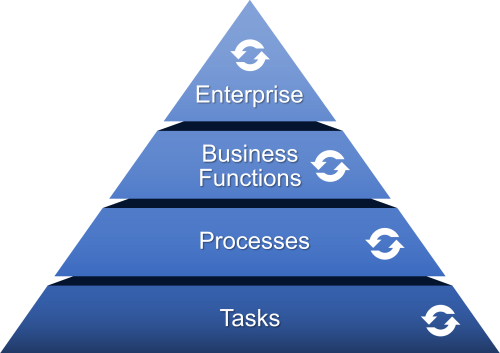

· Ensure each opportunity moves through the BD lifecycle effectively

Powered by Deep Market Expertise

DWPA’s fractional practitioners don’t operate in a vacuum—they plug into a network of over 500 former senior government executives across Defense, Intelligence, Civilian, Homeland Security, Health, and Space agencies.

This deep agency insight helps our clients:

· Understand mission and acquisition priorities

· Adapt to policy changes like FAR reform and GSA consolidation

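· Navigate newer buying models such as Other Transaction Authority (OTA), Commercial Solutions Openings (CSO), and Small Business Innovation Research (SBIR)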

· Position early and influence effectively

We don’t just support—we help you outsmart the competition.

Why This Matters Right Now

Today’s federal market is undergoing fundamental shifts:

· Shrinking government workforce

· AI and automation transforming workflows

· Greater scrutiny of price, performance, and value

· Consolidated acquisition channels

· Tighter alignment with commercial-first strategies

Now more than ever, companies need an adaptive, disciplined go-to-market strategy that reflects what agencies are buying, how they’re buying, and what success looks like on their side of the table.

The Results Speak for Themselves

Clients that leverage DWPA’s fractional growth solutions report:

· More focused pipelines

· Greater customer intimacy

· Stronger solution alignment and messaging

· Improved proposal quality

· Higher win rates

And perhaps most importantly, they develop the internal muscle to sustain growth long after the engagement ends. We don’t just fill a gap—we build your capability.

Build Smarter, Grow Faster

Our goal isn’t to be a permanent fixture. It’s to help you build a sustainable, scalable growth engine—blending your internal team with the right external support to get there faster and more confidently.

Fractional doesn’t mean a stopgap. It means strategic, efficient, and effective.

If you’re ready to stop tracking and start winning, we’re ready to help.

Let’s Talk

Interested in how fractional growth support could work for your team? Reach out to Mike Mullen, SVP and GM of our Small & Transitional Business Segment, at mike.mullen@dwpassociates.com.